What are Mechanical Measurements?

Mechanical Measurements

The science of measurement is known as metrology. Measurement is carried out to determine whether a manufactured component meets its requirements.

Measurements are mainly of length, mass, time, angle, temperature, squareness, roundness, roughness, parallelism, etc. To measure any quantity, there must be a unit in which to measure and express it.

Measurement is the process of obtaining a quantitative comparison between a predefined standard and a quantity of unknown magnitude.

Terms Used in Mechanical Measurements

- Readability

- Least Count

- Range

- Sensitivity

- Repeatability

- Hysteresis

- Accuracy

- Precision

- Resolution

- Threshold

- Reproducibility

- Calibration

- Traceability

- Response time

- Bias

- Inaccuracy

Readability

This term indicates the closeness with which the scale of the instrument may be read. For example, an instrument with a 30 cm scale will have a higher readability than an instrument with a 15 cm scale and the same range.

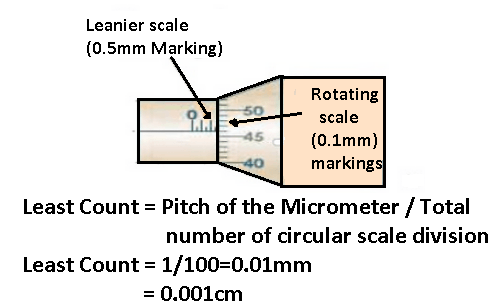

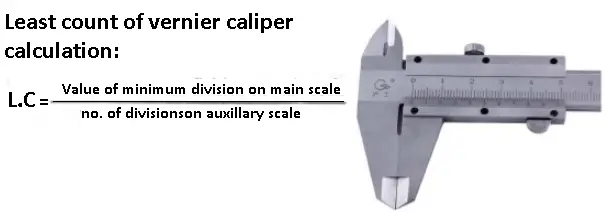

Least Count

It is the smallest difference between two indications that can be detected on the instrument scale. In other words, it is the least value that can be measured with that particular device.

Least Count of Vernier Caliper:
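As a minimal sketch, the least count of a vernier caliper can be computed from the commonly used relation: value of one main-scale division divided by the number of vernier divisions. This assumes the usual design in which N vernier divisions span N - 1 main-scale divisions. The function names and the example caliper (1 mm divisions, 50 vernier divisions) are hypothetical and chosen only for illustration.

```python
def vernier_least_count(main_scale_division: float, vernier_divisions: int) -> float:
    """Least count = value of one main-scale division / number of vernier divisions.

    Assumes N vernier divisions span (N - 1) main-scale divisions.
    """
    if vernier_divisions <= 0:
        raise ValueError("vernier_divisions must be a positive integer")
    return main_scale_division / vernier_divisions


def vernier_reading(main_scale_reading: float, coinciding_division: int,
                    least_count: float) -> float:
    """Total reading = main-scale reading + coinciding vernier division * least count."""
    return main_scale_reading + coinciding_division * least_count


# Hypothetical caliper: 1 mm main-scale divisions, 50 vernier divisions.
least_count_mm = vernier_least_count(1.0, 50)
# Hypothetical reading: 12 mm on the main scale, 7th vernier division coincides.
reading_mm = vernier_reading(12.0, 7, least_count_mm)
print(f"least count = {least_count_mm:.2f} mm, reading = {reading_mm:.2f} mm")
```

With these assumed values the least count works out to 0.02 mm, which is the smallest length this caliper could resolve.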

Range

It represents the least value and largest value that can be measured using that instrument.

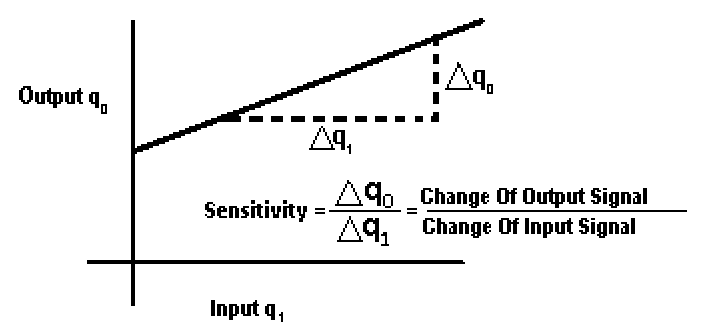

Sensitivity

It is defined as the ratio of the linear movement of the pointer on the instrument to the change in the measured variable causing this motion.

The sensitivity of an instrument should be high and the instrument should not have a range greatly exceeding the value to be measured. However, some clearance should be kept for accidental overloads.

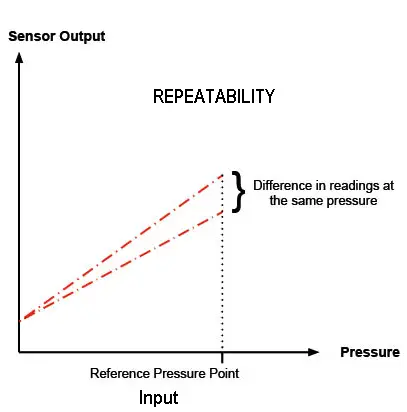
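The ratio that defines sensitivity can be sketched in a few lines. The function name and the example values (a pointer that moves 12 mm for a 4 °C change) are assumptions made purely for illustration.

```python
def sensitivity(pointer_movement: float, input_change: float) -> float:
    """Sensitivity = linear movement of the pointer / change in the measured variable."""
    if input_change == 0:
        raise ValueError("input_change must be non-zero")
    return pointer_movement / input_change


# Hypothetical thermometer: the pointer moves 12 mm when the temperature rises by 4 degC.
s = sensitivity(pointer_movement=12.0, input_change=4.0)
print(f"sensitivity = {s} mm per degC")
```

A higher value means a larger pointer movement for the same change in input, which makes small changes easier to read.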

Repeatability

Repeatability is the ability of a measuring instrument to give the same result when the same quantity is measured repeatedly.

In other words, if an instrument is used to measure an identical input many times at different time intervals, the output is not the same each time but shows a scatter. This scatter, or deviation from the ideal static characteristic, expressed in absolute units or as a fraction of full scale, is called the repeatability error, as shown in the figure below.
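One way to put a number on this scatter is sketched below. It takes the largest deviation of any reading from the mean of the readings, which is only one possible convention and is an assumption here. The function name and the sample readings are hypothetical.

```python
from statistics import mean


def repeatability_error(readings, full_scale=None):
    """Largest deviation of a reading from the mean of all readings.

    Returned in absolute units, or as a fraction of full scale
    when full_scale is given.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    centre = mean(readings)
    scatter = max(abs(r - centre) for r in readings)
    if full_scale is None:
        return scatter
    return scatter / full_scale


# Hypothetical repeated readings of the same input on a 0-100 unit instrument.
readings = [50.2, 49.9, 50.1, 49.8, 50.0]
absolute_error = repeatability_error(readings)
fractional_error = repeatability_error(readings, full_scale=100.0)
print(f"absolute: {absolute_error:.2f}, fraction of full scale: {fractional_error:.4f}")
```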

Hysteresis

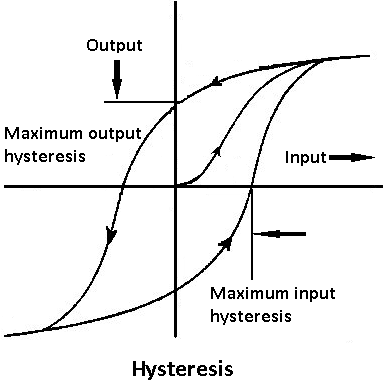

It is the difference between the indications of a measuring instrument when the same value of the measured quantity is reached by increasing or decreasing that quantity.

The phenomenon of hysteresis is due to the presence of dry friction as well as to the properties of elastic elements. It results in the loading and unloading curves of the instrument being separated by a difference called the hysteresis error. It also results in the pointer not returning completely to zero when the load is removed.

Hysteresis is particularly noted in instruments having elastic elements. The phenomenon of Hysteresis in materials is due mainly to the presence of internal stresses. It can be reduced considerably by proper heat treatment.
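The hysteresis error can be estimated from a loading and an unloading run taken at the same input values. The sketch below reports the largest gap between the two curves. The function name and the sample data are hypothetical, and the non-zero unloading reading at zero input mimics a pointer that does not return fully to zero.

```python
def hysteresis_error(loading, unloading):
    """Largest difference between indications at the same input value,
    reached once by increasing and once by decreasing the input."""
    if len(loading) != len(unloading):
        raise ValueError("both runs must cover the same input points")
    return max(abs(up - down) for up, down in zip(loading, unloading))


# Hypothetical indications at inputs 0, 25, 50, 75 and 100 units.
loading_run = [0.0, 24.0, 49.0, 74.5, 100.0]     # input increasing
unloading_run = [1.0, 26.0, 51.5, 76.0, 100.0]   # input decreasing
error = hysteresis_error(loading_run, unloading_run)
zero_offset = unloading_run[0] - loading_run[0]  # pointer not back at zero
print(f"hysteresis error = {error}, zero offset = {zero_offset}")
```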

Accuracy

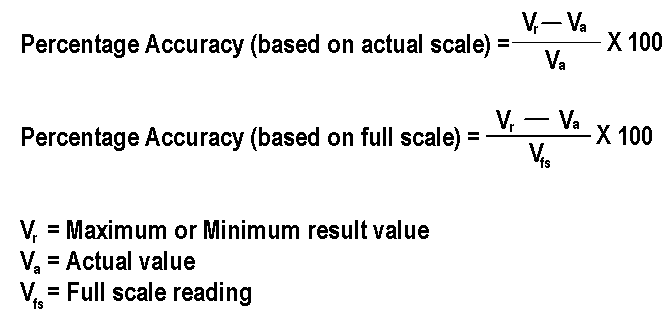

The accuracy of an instrument is the deviation of its reading from a known input. In other words, accuracy is the closeness with which the readings of an instrument approach the true values of the quantities measured.

It is expressed in percentage, based on either actual scale reading or full-scale reading as below:
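The two percentage bases can be sketched as follows. The first function divides the error by the true value and the second divides it by the full-scale value. Both follow a common convention rather than a formula given in this article, and the function names and sample numbers are hypothetical.

```python
def percent_error_of_reading(indicated: float, true_value: float) -> float:
    """Error as a percentage of the true value (assumed convention)."""
    if true_value == 0:
        raise ValueError("true_value must be non-zero")
    return (indicated - true_value) / true_value * 100.0


def percent_error_of_full_scale(indicated: float, true_value: float,
                                full_scale: float) -> float:
    """Error as a percentage of the full-scale reading (assumed convention)."""
    if full_scale == 0:
        raise ValueError("full_scale must be non-zero")
    return (indicated - true_value) / full_scale * 100.0


# Hypothetical meter with a 200-unit full scale that indicates 102 for a true value of 100.
of_reading = percent_error_of_reading(102.0, 100.0)
of_full_scale = percent_error_of_full_scale(102.0, 100.0, 200.0)
print(f"{of_reading:.1f}% of reading, {of_full_scale:.1f}% of full scale")
```

The same 2-unit error looks smaller when quoted against full scale, so it matters which basis a specification uses.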

Precision

The precision of an instrument indicates its ability to reproduce a certain reading with a given accuracy. In other words, it is the degree of agreement between repeated results.

Precision refers to the repeatability of the measuring process, i.e., the closeness with which measurements of the same physical quantity agree with one another.

Consider an example to differentiate between precision and accuracy.

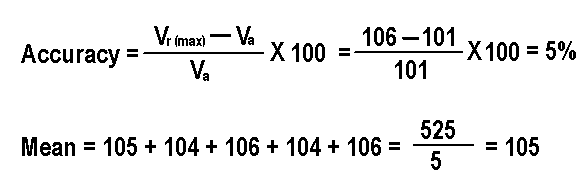

Suppose a known voltage of 101 volts is measured with a certain meter, and five readings indicate 105, 104, 106, 104, and 106 volts. From these values, the accuracy of the instrument is no better than about 5%, because the largest reading (106 volts) deviates from the known value by 5 volts.

The precision, however, is about ±1%, because the maximum deviation (106 volts) from the mean reading (105 volts) is only 1 volt. The instrument could therefore be calibrated so that it dependably measures voltages within ±1 volt. This shows that calibration can improve accuracy, but not beyond the precision of the instrument.
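The arithmetic of this voltmeter example can be checked with a short script. It uses the readings and the known voltage quoted above. Taking the worst-case deviation as the measure of accuracy and precision is an assumption that matches the wording of the example.

```python
from statistics import mean

known_voltage = 101.0
readings = [105.0, 104.0, 106.0, 104.0, 106.0]

# Accuracy: worst deviation of any reading from the known value.
worst_error = max(abs(r - known_voltage) for r in readings)
accuracy_percent = worst_error / known_voltage * 100.0

# Precision: worst deviation of any reading from the mean reading.
mean_reading = mean(readings)
worst_scatter = max(abs(r - mean_reading) for r in readings)
precision_percent = worst_scatter / mean_reading * 100.0

print(f"worst error   = {worst_error} V ({accuracy_percent:.2f}% of known value)")
print(f"mean reading  = {mean_reading} V")
print(f"worst scatter = {worst_scatter} V ({precision_percent:.2f}% of mean)")
```

The worst error is 5 volts (about 5%), while the scatter about the mean is only 1 volt (about 1%), which is why the meter is more precise than it is accurate.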

Resolution

Resolution, also called discrimination, is defined as the smallest increment of the input signal that a measuring system is capable of displaying.

Threshold

If the instrument input is increased very gradually from zero, there will be some minimum value below which no output change can be detected. This minimum value defines the threshold of the instrument. The main differences between threshold and resolution are:

- Resolution is the smallest measurable input change, while threshold is the smallest measurable input.

- The threshold is measured when the input is varied from zero while the resolution is measured when the input is varied from any arbitrary non-zero value.
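The difference between the two terms can be illustrated with a toy instrument model. This is a hypothetical sketch, not a model of any real instrument: the indication stays at zero until the input reaches the threshold, and above that it changes only in steps equal to the resolution. The function name and the numbers are assumptions.

```python
import math


def indicated_value(true_input: float, threshold: float, resolution: float) -> float:
    """Toy model: no indication below the threshold; above it, the
    indication moves in steps equal to the resolution."""
    if abs(true_input) < threshold:
        return 0.0
    return math.floor(true_input / resolution) * resolution


THRESHOLD = 0.5    # smallest input that produces any indication
RESOLUTION = 0.25  # smallest input change that alters the indication

# Starting from zero: an input below the threshold is not detected at all.
print(indicated_value(0.4, THRESHOLD, RESOLUTION))
print(indicated_value(0.5, THRESHOLD, RESOLUTION))

# Starting from a non-zero value: a change smaller than the resolution is not shown.
print(indicated_value(10.1, THRESHOLD, RESOLUTION))
print(indicated_value(10.2, THRESHOLD, RESOLUTION))
print(indicated_value(10.25, THRESHOLD, RESOLUTION))
```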

Reproducibility

Reproducibility is a major requirement of instruments wherever close control of quality is required. It is defined as the degree of closeness with which the same value of a variable may be measured at different times.

Reproducibility is affected by several factors, such as drift in the calibration of a thermocouple at high temperature due to contamination. Periodic checking and maintenance of instruments are generally carried out to maintain reproducibility. Perfect reproducibility means that an instrument has no drift.

Calibration

Any measuring system must be provable, i.e., it must prove its ability to measure reliably. The procedure adopted for this is called ‘calibration’.

When the system is prepared to measure quantities, known values of the input quantities are fed to the system, and the corresponding outputs are measured. A graph relating the output to the input is plotted and is known as a ‘Calibration Graph’.
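A calibration graph is often summarized by a fitted curve. The sketch below fits a straight line to input and output pairs by least squares and then uses it to convert a raw reading back into an input value. The straight-line form is an assumption, since a real calibration graph may be non-linear, and the function names and data are hypothetical.

```python
def fit_calibration_line(inputs, outputs):
    """Least-squares straight line: output = slope * input + intercept."""
    if len(inputs) != len(outputs) or len(inputs) < 2:
        raise ValueError("need at least two matching input/output pairs")
    n = len(inputs)
    mean_x = sum(inputs) / n
    mean_y = sum(outputs) / n
    sxx = sum((x - mean_x) ** 2 for x in inputs)
    if sxx == 0:
        raise ValueError("inputs must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(inputs, outputs))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def corrected_input(reading, slope, intercept):
    """Invert the fitted line to estimate the input that produced a reading."""
    return (reading - intercept) / slope


# Hypothetical calibration run: known inputs and the outputs the instrument showed.
known_inputs = [0.0, 10.0, 20.0, 30.0, 40.0]
observed_outputs = [0.5, 10.7, 20.9, 31.1, 41.3]
slope, intercept = fit_calibration_line(known_inputs, observed_outputs)
print(f"slope = {slope:.3f}, intercept = {intercept:.3f}")
print(f"reading 25.0 corresponds to input {corrected_input(25.0, slope, intercept):.2f}")
```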

The procedure involves the comparison of a particular instrument with either:

- A primary standard

- A secondary standard with a higher accuracy than the instrument to be calibrated

- A known input source

Traceability

This is the concept of establishing a valid calibration of a measuring instrument or measurement standard by step-by-step comparison with better standards, up to an accepted or specified standard.

Response Time

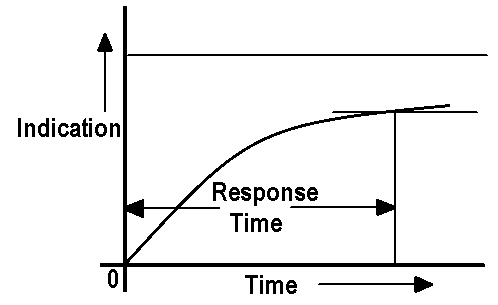

It is the time that elapses after a sudden change in the measured quantity until the instrument gives an indication differing from the true value by an amount less than a given permissible error.
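As an illustration, the response time can be worked out for an instrument that behaves as a first-order system, where the remaining error after a step change decays as exp(-t / time constant). The first-order model is an assumption that many instruments do not follow exactly, and the function name and numbers are hypothetical.

```python
import math


def first_order_response_time(step_size: float, permissible_error: float,
                              time_constant: float) -> float:
    """Time after a step change until the remaining error of a first-order
    instrument falls to the permissible error.

    Remaining error at time t: |step_size| * exp(-t / time_constant).
    """
    if permissible_error <= 0 or time_constant <= 0:
        raise ValueError("permissible_error and time_constant must be positive")
    if permissible_error >= abs(step_size):
        return 0.0  # already within the permissible error
    return time_constant * math.log(abs(step_size) / permissible_error)


# Hypothetical instrument: 2 s time constant, 100-unit step, 1-unit permissible error.
t_response = first_order_response_time(step_size=100.0, permissible_error=1.0,
                                       time_constant=2.0)
remaining_error = 100.0 * math.exp(-t_response / 2.0)
print(f"response time = {t_response:.2f} s, remaining error = {remaining_error:.2f}")
```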

Bias

It is the characteristic of a measuring instrument to give indications of the value of a measured quantity whose average differs from the true value of that quantity.

Bias errors are the algebraic sum of all the systematic errors affecting the indication of the instrument. The main sources of bias are maladjustment of the instrument, permanent set, non-linearity errors, and errors of material measures such as capacity measures, gauge blocks, etc.
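Bias can be estimated as the difference between the average indication and the true value. The sketch below reuses the voltmeter readings from the precision example earlier in this article. The function name is hypothetical.

```python
from statistics import mean


def bias(readings, true_value: float) -> float:
    """Average indication minus the true value of the measured quantity."""
    if not readings:
        raise ValueError("readings must not be empty")
    return mean(readings) - true_value


# Readings and known voltage taken from the voltmeter example above.
voltmeter_bias = bias([105.0, 104.0, 106.0, 104.0, 106.0], true_value=101.0)
print(f"bias = {voltmeter_bias} V")
```

A positive result means the instrument reads high on average, which is the kind of error a calibration correction can remove.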

Inaccuracy

It is the total error of a measure or measuring instrument under specified conditions of use including bias and repeatability errors.

Inaccuracy is specified by two limiting values, obtained by adding the limiting value of the repeatability error to the bias error and by subtracting it from the bias error.

If the known systematic errors are corrected, the remaining inaccuracy is due to the random errors and the residual systematic error that also has a random character. This inaccuracy is called uncertainty of measurement.
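The two limiting values of inaccuracy follow directly from the bias error and the limiting repeatability error. A minimal sketch, with a hypothetical function name and made-up numbers:

```python
def inaccuracy_limits(bias_error: float, repeatability_limit: float):
    """Lower and upper limits of inaccuracy: bias error -/+ limiting repeatability error."""
    if repeatability_limit < 0:
        raise ValueError("repeatability_limit must be non-negative")
    return bias_error - repeatability_limit, bias_error + repeatability_limit


# Hypothetical thermometer: bias of +0.5 degC, repeatability within 0.25 degC.
lower, upper = inaccuracy_limits(bias_error=0.5, repeatability_limit=0.25)
print(f"total error lies between {lower} and {upper} degC")

# With the known bias corrected, only the random part remains.
lower_corrected, upper_corrected = inaccuracy_limits(0.0, 0.25)
print(f"after correction: between {lower_corrected} and {upper_corrected} degC")
```

The second call reflects the case where the systematic error has been corrected, leaving a band that is symmetric about zero.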

These are the important terms used in mechanical measurements.
